Remember your first AI chatbot? The one that could handle maybe three questions before desperately routing you to a human agent? That was 2022.

Today, AI generates photorealistic videos from text prompts. It orchestrates dozens of autonomous agents working in concert. It builds complete marketing campaigns from a single brand guidelines PDF.

We crossed that distance in about three years.

2025 will be remembered as the year AI stopped being a feature and became infrastructure. The year when "Can it do X?" became "How many can we deploy?" This shift matters because what happened in 2025 doesn't stay in 2025. These trends are foundation-setting, the kind that reshape how we work, create, and connect with audiences for years to come.

If you're wondering what to expect in 2026, the answer is already taking shape. Here are the ten trends that defined 2025 and will dominate your 2026 roadmap.

1. Agentic AI: When Software Learns to Think Ahead

The term "agentic AI" went from conference buzzword to board-level priority in record time. Unlike traditional AI that responds to prompts, agentic systems plan, act, and iterate across multiple tools and data sources. Our in-depth primer covers the concept in detail, but the short version is this: it's a new class of AI that acts more like an autonomous digital teammate than a tool.

Microsoft made the boldest bet at Ignite 2025 with Agent 365, a platform designed to deploy and manage AI agents across Word, Excel, Outlook, and Windows. They're not shy about the vision: agents as "the apps of the AI era," with projections of over 1.3 billion agents by 2028. Every major vendor, from Google and Amazon to the LLM providers, is re-architecting products around agents instead of single API calls.

What marketers should expect: Think of agentic AI as your always-on campaign optimizer that doesn't need you to check in every hour. These systems can monitor performance, adjust bids, pause underperforming creative, and draft new variants without human intervention.

The catch? You'll need to get comfortable with setting guardrails and success metrics upfront, then trusting the agent to execute. In 2026, expect your martech stack to include agent orchestration tools that let you manage dozens of specialized agents—one for email sequences, another for social listening, another for competitive intelligence.
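To make "setting guardrails upfront" concrete, here is a minimal sketch of a review step that sits between an agent's proposal and its execution. Everything in it is an assumption for illustration: the `Guardrails` fields, the action names, and the default limits are hypothetical and do not come from any specific vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    """Limits a human sets upfront (all default values are illustrative)."""
    max_bid_change_pct: float = 20.0      # largest single bid adjustment allowed
    max_daily_budget: float = 500.0       # hard spend ceiling per campaign
    min_impressions_to_pause: int = 1000  # don't pause creative on thin data


@dataclass(frozen=True)
class ProposedAction:
    kind: str             # "adjust_bid", "set_budget", or "pause_creative"
    value: float = 0.0    # percent change for bids, new amount for budgets
    impressions: int = 0  # evidence behind a pause decision


def review(action: ProposedAction, rails: Guardrails) -> tuple[bool, str]:
    """Return (approved, reason). Unrecognized actions are rejected, not run."""
    if action.kind == "adjust_bid":
        if abs(action.value) > rails.max_bid_change_pct:
            return False, "bid change exceeds limit"
        return True, "ok"
    if action.kind == "set_budget":
        if action.value > rails.max_daily_budget:
            return False, "budget exceeds ceiling"
        return True, "ok"
    if action.kind == "pause_creative":
        if action.impressions < rails.min_impressions_to_pause:
            return False, "not enough data to pause"
        return True, "ok"
    return False, "unknown action, escalate to a human"
```

The design choice worth noticing is the last line: anything the guardrails don't explicitly recognize gets escalated rather than executed, so the agent's freedom is bounded by what you defined in advance.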

Marketing skills will soon shift from doing the work to designing the system.

2. Multimodal Models: Beyond "Can Look at Images"

2025 was the year multimodal stopped being a checkbox feature and became native, reasoning-level intelligence. We're talking about models that process text, images, audio, video, charts, and UI screens simultaneously: not as separate inputs but as integrated understanding.

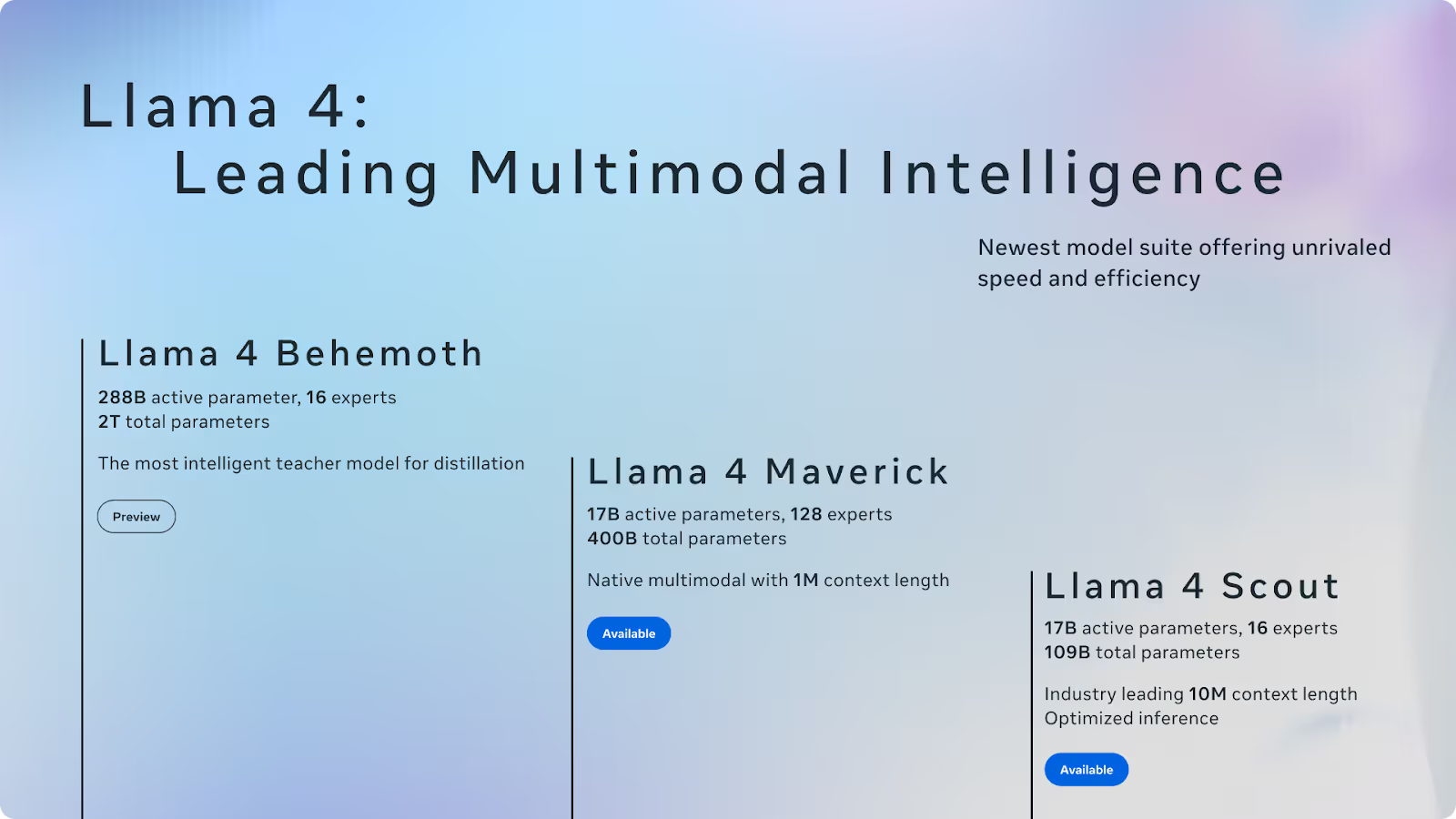

Meta's Llama 4 lineup (Scout and Maverick, with Behemoth previewed) arrived as natively multimodal models. Google delivered multimodal models like Gemini 3 that can process documents, visuals, and live interactions in a single workflow.

On the research side, we saw a flood of specialized benchmarks: the MARS2 2025 Challenge for multimodal reasoning at ICCV, new techniques for representation learning and fusion across modalities, and domain-specific benchmarks like MEDVQA-GI for gastrointestinal imaging.

Gartner's 2025 AI Hype Cycle named multimodal AI as one of the top innovations expected to hit mainstream adoption within five years. That timeline is probably conservative.

What marketers should expect: Your creative brief just got a lot more complex—and a lot more interesting. Multimodal models mean you can feed in a product image, a brand video, customer service transcripts, and sales data, then ask the AI to generate campaign concepts that synthesize all of it.

The 2026 use cases will be incredibly specific: multimodal models that can identify messaging patterns across ads, or models that turn podcast content into social clips while maintaining speaker intent. The horizontal "do everything" models exist now. The vertical, use-case-specific applications are what 2026 is about.

3. AI-Native Apps: When the Assistant Becomes the Platform

We're witnessing a fundamental shift from "AI features inside apps" to apps that are AI. This isn't subtle. The entire UX paradigm is changing.

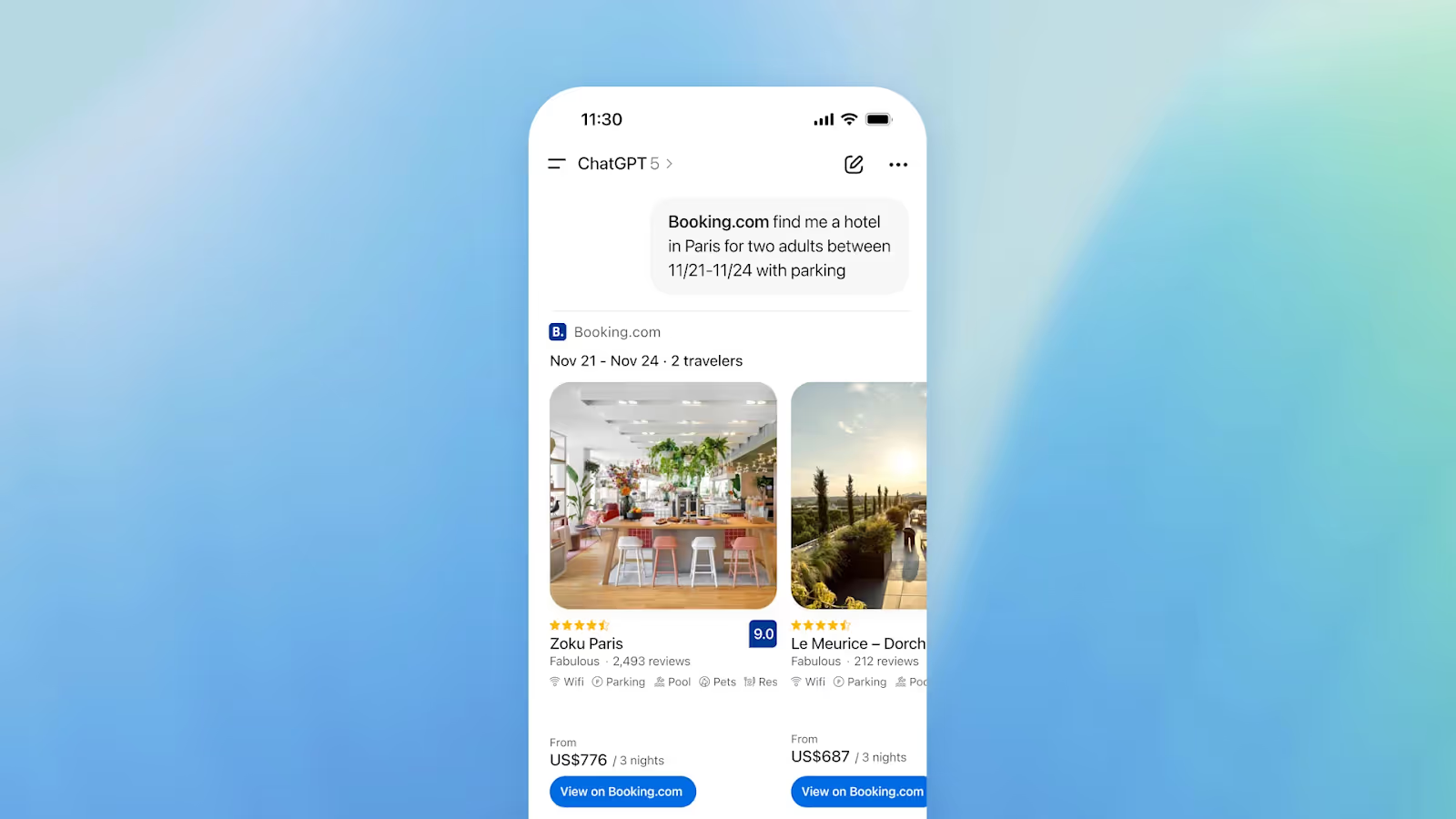

In October 2025, OpenAI launched a full app ecosystem inside ChatGPT, powered by the Apps SDK and built on the Model Context Protocol (MCP). Apps like Booking.com, Canva, Spotify, and Zillow now exist as conversational experiences within ChatGPT, with monetization and deeper partner integrations coming. Meta launched a standalone AI app with voice-first interaction and a Discover feed for exploring and remixing prompts, integrated with Ray-Ban smart glasses.

These aren't wrappers around existing web apps. They're AI-only experiences where every interaction—from UI to routing to data retrieval—is built around LLMs and agents.

What marketers should expect: You're going to need an AI app strategy the same way you needed a mobile app strategy in 2010. The difference is speed.

In 2026, major platforms will launch "AI app stores" with discovery, ranking, and monetization. If you're a brand, you'll need to decide: do we build a conversational app that lives inside ChatGPT or Meta AI? Do we integrate with agents via MCP so they can access our product catalog?

The early movers will own the high-ranking positions. The late movers will scramble to be found. Start prototyping now, even if it's just a basic "book a demo" agent, because the land grab is coming.

4. Infinite AI Feeds: Content Without Creators

Meta's Vibes is the clearest signal of where this is headed. It's a TikTok-style feed of entirely AI-generated short-form video—no human creators, no uploads, just an endless stream of AI-made clips you can watch, remix, and share to Instagram or Facebook. Launched as part of the Meta AI app and available at meta.ai, Vibes is basically an always-on generative content firehose.

Meta's AI site also pushes creation flows that let users generate, remix, and share AI images and videos in one place. The content never stops. The cost approaches zero.

What marketers should expect: When platforms start serving infinite AI-generated content, your organic reach problem gets far harder. Why would Meta show your brand post when it can generate perfectly optimized content for each user on the fly?

The counterintuitive opportunity: paid media becomes more effective because human-created, brand-backed content will be a signal of quality and authenticity. In 2026, expect to see "verified human content" badges and ranking algorithms that explicitly reward genuine creation over synthetic remixes.

Your strategy should split: invest in high-quality, undeniably human storytelling for brand building, and use AI-generated content for testing, iteration, and volume plays.

The middle ground—mediocre human content—will get buried.

5. GenAI-First Advertising: When the Machine Makes the Creative

Meta is the bellwether here. They've deployed Andromeda, a new personalized ads retrieval engine running on NVIDIA Grace Hopper, designed to massively scale machine learning in the ads stack. At Cannes 2025, they announced new generative tools that automatically create brand-consistent ad creatives inside Advantage+, with 70% year-over-year growth in Advantage+ Shopping campaigns.

This is AI doing two jobs: optimizing targeting and bidding, and producing the actual ad assets from brand inputs. McKinsey's Technology Trends Outlook 2025 highlights GenAI in marketing, plus application-specific AI semiconductors, as the keys to making this economical at scale.

What marketers should expect: Performance marketers care about ROAS, not ideology. As soon as AI creatives and AI bidding beat human-only setups on cost per result, budgets lock in.

You're already seeing it: upload a brand kit, get 1,000 variant ads optimized for different audiences, placements, and contexts. In 2026, expect this to expand across Meta, Google, Amazon, and TikTok with deeper brand safety controls and style customization.

Your creative team needs to shift from making ads to making systems. The new skill is designing brand kits, style guides, and creative frameworks that AI can execute at scale. Think of it as going from artisan to architect.

6. Efficient Foundation Models: The Economics of Scale

As models and usage exploded, 2025 saw a massive push toward efficiency. Sparse Mixture-of-Experts (MoE) is becoming standard: only a subset of experts fires per token, so inference cost tracks the active experts rather than the full parameter count. Time-MoE, heavily cited research from 2024, pushes this approach to billion-parameter time-series forecasting models.
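For readers who want to see why sparse routing saves compute, here is a minimal sketch of top-k expert routing for a single token, in plain Python. It is a toy under stated assumptions, not how any particular model implements it: the linear gate, the `top_k=2` default, and the choice to renormalize the gate after selecting experts are all illustrative, and production systems vary on each of these.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_weights, top_k=2):
    """Route one token through only the top_k highest-scoring experts.

    x: token representation, a list of floats
    experts: list of callables, each mapping a vector to a vector
    gate_weights: one weight vector per expert (a linear gating layer)
    """
    # Gate: one score per expert (dot product of the token with that expert's weights).
    scores = [sum(w * xi for w, xi in zip(weights, x)) for weights in gate_weights]
    # Keep only the top_k experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate over the chosen experts only.
    mix = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, idx in zip(mix, chosen):
        expert_out = experts[idx](x)
        output = [o + weight * e for o, e in zip(output, expert_out)]
    return output, chosen
```

With four experts and `top_k=2`, only two expert functions run per token. The other two cost nothing at inference time, which is where the savings come from.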

Apple's 2025 foundation models are a perfect example: a roughly 3-billion-parameter on-device model plus a larger server MoE model tailored for Private Cloud Compute. Small local model plus big MoE in the cloud, by design. McKinsey's 2025 tech trends report treats application-specific semiconductors and energy-efficient AI as core enablers for future AI workloads.

What marketers should expect: Inference cost is now a board-level conversation. That matters for you because cheaper inference means more experimentation. In 2026, you'll run more tests, generate more variants, and personalize at finer granularity than ever before, without blowing your budget.

Hybrid stacks (small local model for fast, private interactions plus large cloud model for complex reasoning) will become standard in martech. Expect tools that let you preview content on-device before sending anything to the cloud, preserving customer privacy while maintaining speed.
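A hybrid stack ultimately comes down to a routing decision per request. The sketch below shows one way that decision could look. The `Request` fields, the 200-word threshold, and the privacy-first ordering are assumptions made for illustration, not a description of how Apple or any martech vendor routes traffic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    text: str
    contains_personal_data: bool = False
    needs_deep_reasoning: bool = False


def choose_tier(req: Request, local_word_limit: int = 200) -> str:
    """Pick "local" or "cloud" for a request (hypothetical policy)."""
    # Privacy first: personal data stays on the device regardless of difficulty.
    if req.contains_personal_data:
        return "local"
    # Long or reasoning-heavy requests go to the larger cloud model.
    if req.needs_deep_reasoning or len(req.text.split()) > local_word_limit:
        return "cloud"
    # Everything else is served by the small, fast local model.
    return "local"
```

The order of the checks is the policy. Putting the privacy rule first means a sensitive request never leaves the device, even when the cloud model would give a better answer.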

The economic constraints that made AI feel expensive in 2023 are disappearing. Use that freedom wisely.

7. Vertical AI: Where the Real Differentiation Happens

2025 was packed with research showing that horizontal LLMs are table stakes. The action is in verticalized models. Healthcare saw multimodal VQA models for gastrointestinal diagnostics (MEDVQA-GI 2025) and models predicting clinical outcomes of drug combinations from preclinical data. Software engineering got multimodal GenAI using code, backlog text, and UI artifacts for story point estimation. Time-series forecasting saw foundation models like Time-MoE designed for large-scale, industry-specific predictions with efficient MoE architectures.

Gartner's AI and GenAI Hype Cycles both call out vertical AI and domain-specific models as moving quickly toward impact.

What marketers should expect: Generic "ChatGPT for marketing" tools will flood the market in 2026, but the winners will be hyper-specific. Think: AI trained exclusively on e-commerce checkout flows to optimize conversion copy. AI trained on restaurant reviews to generate menu descriptions that drive orders. AI trained on SaaS onboarding emails to reduce churn.

If you're in a regulated industry (healthcare, finance, legal), expect vertical models with built-in compliance and safety layers.

The differentiation comes from data plus domain adaptation. If you have proprietary customer data, training vertical models on that data will be your moat. Partner with vendors who understand your vertical deeply, not ones offering one-size-fits-all solutions.

8. AI at the Edge: Phones, Glasses, and Ambient Assistants

Apple's 2025 models are explicitly tuned to run efficiently on Apple silicon, with a small on-device foundation model plus a bigger server MoE via Private Cloud Compute—privacy and latency by design. Meta's AI app is tightly integrated with Ray-Ban smart glasses: start a conversation on the glasses, continue it on your phone or desktop. The app replaces the old Meta View app and becomes the central hub for captured media plus AI interaction.

This is the first real step toward ambient AI—assistants that live across your devices instead of in a single chat window.

What marketers should expect: On-device AI changes the privacy equation. When processing happens locally, customers are more comfortable sharing data. In 2026, expect marketing use cases like voice assistants in smart glasses that can answer product questions without an internet connection, and real-time translation for in-store experiences.

The latency-sensitive stuff—assistive tech, AR overlays, safety features—will all shift to edge inference. For marketers, this means rethinking what "online" means. Your AI experiences need to work offline, on-device, and across a customer's entire device ecosystem. The old model of "visit our website" feels increasingly dated.

9. AI Safety and TRiSM: Trust as a Product Feature

Gartner's 2025 work on AI TRiSM (Trust, Risk, and Security Management) positions it as a top-priority innovation, with layered enforcement across policy, runtime validation, monitoring, and incident response. Their Hype Cycles for AI and GenAI explicitly highlight AI trust and safety alongside multimodal models as key innovations. Recent posts from major AI labs on external red-teaming and evaluation frameworks underscore a move toward standardized safety practices.

Survey work on agentic AI dedicates significant space to governance, with widely cited research on autonomous agents and practices for governing agentic systems.

What marketers should expect: Regulators, large enterprises, and insurers are converging on the idea that you need auditable safety, bias, and robustness layers to deploy GenAI at scale. In 2026, you'll see more RFPs demanding explainable evaluation and logging.

Expect vendors selling "TRiSM platforms" that sit between your martech stack and your AI models, monitoring every interaction for compliance, bias, and brand safety. This isn't just a legal checkbox—it's a competitive advantage. Brands that can demonstrate trustworthy AI use will win customer confidence.
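A layer that "sits between your martech stack and your AI models" can be pictured as a wrapper around every model call. The sketch below is a deliberately simple stand-in: the banned-term check, the log format, and the `audited` helper are hypothetical, and a real TRiSM product would use far richer policy and bias checks than substring matching.

```python
import json
import time
from typing import Callable


def audited(model: Callable[[str], str], banned_terms: list[str], log: list[str]) -> Callable[[str], str]:
    """Wrap a model call so every interaction is checked and logged."""
    def call(prompt: str) -> str:
        reply = model(prompt)
        # Illustrative policy check: flag replies containing any banned term.
        hits = [t for t in banned_terms if t.lower() in reply.lower()]
        record = {
            "ts": time.time(),
            "prompt": prompt,
            "reply": reply,
            "violations": hits,
            "blocked": bool(hits),
        }
        log.append(json.dumps(record))  # one JSON line per interaction
        if hits:
            return "[withheld pending review]"
        return reply
    return call
```

Note that the log entry is written whether or not the reply is blocked. An audit trail that only records failures can't show that the passing interactions were ever checked.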

Build your safety and evaluation processes now, even if they feel like overkill. When something goes wrong—and eventually it will—your response time and transparency will define your reputation.

10. Open Standards: The Protocols That Connect AI to Everything

The Apps SDK from OpenAI is built on the Model Context Protocol (MCP)—an open standard for connecting models to tools, data sources, and full conversational apps. The same ecosystem is introducing the Agentic Commerce Protocol, aimed at standardizing how agents perform secure, instant checkout actions.

This is subtle but critical. Instead of every company building proprietary plugin mechanisms, we're starting to see shared standards for how agents talk to APIs, UIs, and payment systems.

What marketers should expect: If agents are going to act on your behalf—book flights, move money, edit files—you need interoperable, audited protocols. In 2026, expect more MCP-compatible runtimes from multiple vendors, competing but overlapping standards from big tech, and standardized "capability manifests" for what an agent is allowed to do.
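A "capability manifest" is easier to reason about with a toy version in hand. The sketch below is purely hypothetical: the field names and the `is_permitted` helper are invented for illustration and are not part of MCP, the Agentic Commerce Protocol, or any published standard.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CapabilityManifest:
    """Hypothetical manifest; field names are illustrative, not from MCP."""
    agent_id: str
    allowed_tools: frozenset = field(default_factory=frozenset)
    spend_limit: float = 0.0  # maximum value of any single payment action


def is_permitted(manifest: CapabilityManifest, tool: str, amount: float = 0.0) -> bool:
    """Deny by default: a tool must be listed, and payments must fit the limit."""
    if tool not in manifest.allowed_tools:
        return False
    return amount <= manifest.spend_limit
```

For example, a travel agent whose manifest lists `search_flights` and `book_flight` with a spend limit of 500 could book a 420 fare, but a 900 fare or any `move_money` call would be refused before it reached a payment system.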

For marketers, this means: build your integrations on open standards, not proprietary APIs. When you choose martech vendors in 2026, ask if they support MCP or similar protocols. The ones that do will integrate seamlessly with every AI agent your team uses.

The ones that don't will become expensive, isolated silos. Interoperability isn't sexy, but it's the difference between a connected AI ecosystem and a Frankenstack.

What This Means for 2026

These ten trends aren't predictions—they're already happening. The infrastructure is live. The research is published. The products are shipping. What changes in 2026 is scale and specificity. Agents go from dozens to millions. Multimodal models go from demos to production. AI-native apps go from experiments to primary channels.

Your job isn't to adopt all of this at once. It's to choose the one or two trends that will transform your business and commit fully. Dabbling doesn't work anymore. The gap between early movers and late movers is widening fast, not because the technology is inaccessible but because the learning curve is real and the network effects are brutal.

2025 built the foundation. 2026 is when we build on it. Choose your ground carefully.